I am a co-founder of PsiBot. Before joining PsiBot, I worked with Prof. Yaodong Yang at Peking University. I also had the privilege of working closely with Prof. Xiaolong Wang at UCSD, Prof. Chenguang Yang at SCUT, and Prof. Hao Dong at Peking University. In 2023, I was fortunate to work as a visiting researcher at Stanford University, advised by Prof. Karen Liu and Prof. Fei-Fei Li.
Email / CV / Google Scholar / GitHub

I'm interested in robotics, dexterous manipulation, reinforcement learning, and sim-to-real transfer. My goal is to enable robots to perform the same cool behaviors in the real world as they do in simulation.

Yuanpei Chen, Chen Wang, Yaodong Yang, C. Karen Liu
CoRL, 2024, Accepted
Project Page / ArXiv / Code (Coming Soon)
We introduce a hierarchical framework that uses human hand motion data and deep reinforcement learning to train dexterous robot hands for effective object-centric manipulation in both simulation and the real world.

Yuanpei Chen*, Chen Wang*, Li Fei-Fei, C. Karen Liu
CoRL, 2023, Accepted
Project Page / ArXiv / Code / Video
We present Sequential Dexterity, a general system based on reinforcement learning (RL) that chains multiple dexterous policies to achieve long-horizon task goals.

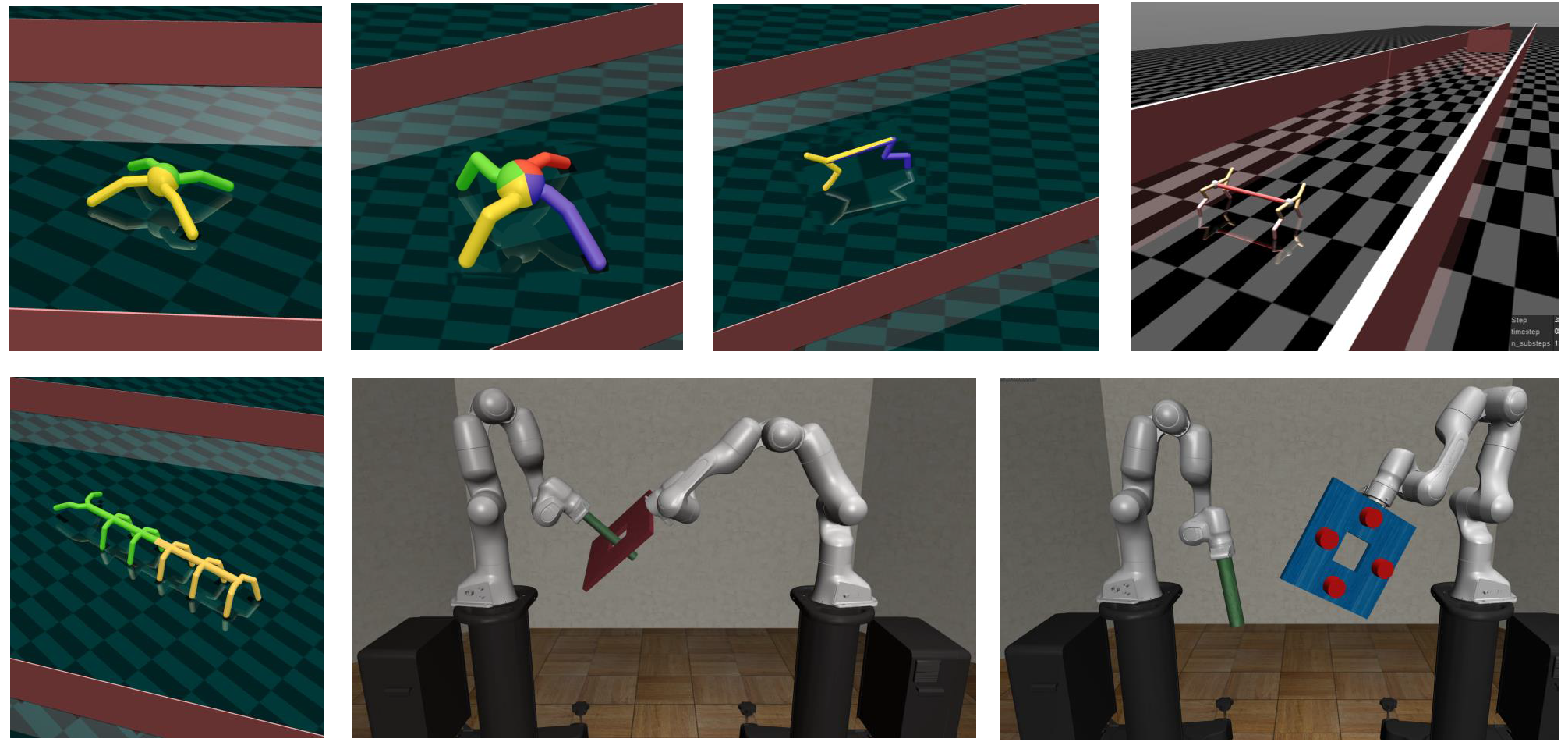

Binghao Huang*, Yuanpei Chen*, Tianyu Wang, Yuzhe Qin, Yaodong Yang, Nikolay Atanasov, Xiaolong Wang
CoRL, 2023, Accepted
Project Page / ArXiv / Code
We design a system with two multi-finger hands attached to robot arms to solve the dynamic handover problem. We train the system with multi-agent reinforcement learning in simulation and perform Sim2Real transfer to deploy it on real robots.

Yuanpei Chen, Tianhao Wu, Shengjie Wang, Xidong Feng, Jiechuang Jiang, Stephen Marcus McAleer, Hao Dong, Zongqing Lu, Song-Chun Zhu, Yaodong Yang
Journal Version: T-PAMI, 2023, Accepted
Conference Version: NeurIPS, 2022, Accepted
Project Page / ArXiv / Code
We propose Bi-DexHands, a bimanual dexterous manipulation benchmark designed according to the cognitive science literature, for comprehensive reinforcement learning research.

Yifan Zhong*, Xuchuan Huang*, Ceyao Zhang, Ruochong Li, Yitao Liang, Yaodong Yang†, Yuanpei Chen†
Project Page / ArXiv / Code
We train DexGraspVLA on domain-invariant representations, achieving over 90% zero-shot grasping success across thousands of unseen scenarios, outperforming previous imitation learning methods and showing high robustness.

Fengshuo Bai, Yu Li, Jie Chu, Tawei Chou, Runchuan Zhu, Ying Wen, Yaodong Yang†, Yuanpei Chen†
Project Page / ArXiv
We present a dexterous robot system that learns to efficiently retrieve buried objects through reinforcement learning.

Yuhan Wang, Yu Li, Yaodong Yang†, Yuanpei Chen†
Project Page
We present a dexterous manipulation system that leverages reinforcement learning to grasp ungraspable objects through extrinsic dexterity.

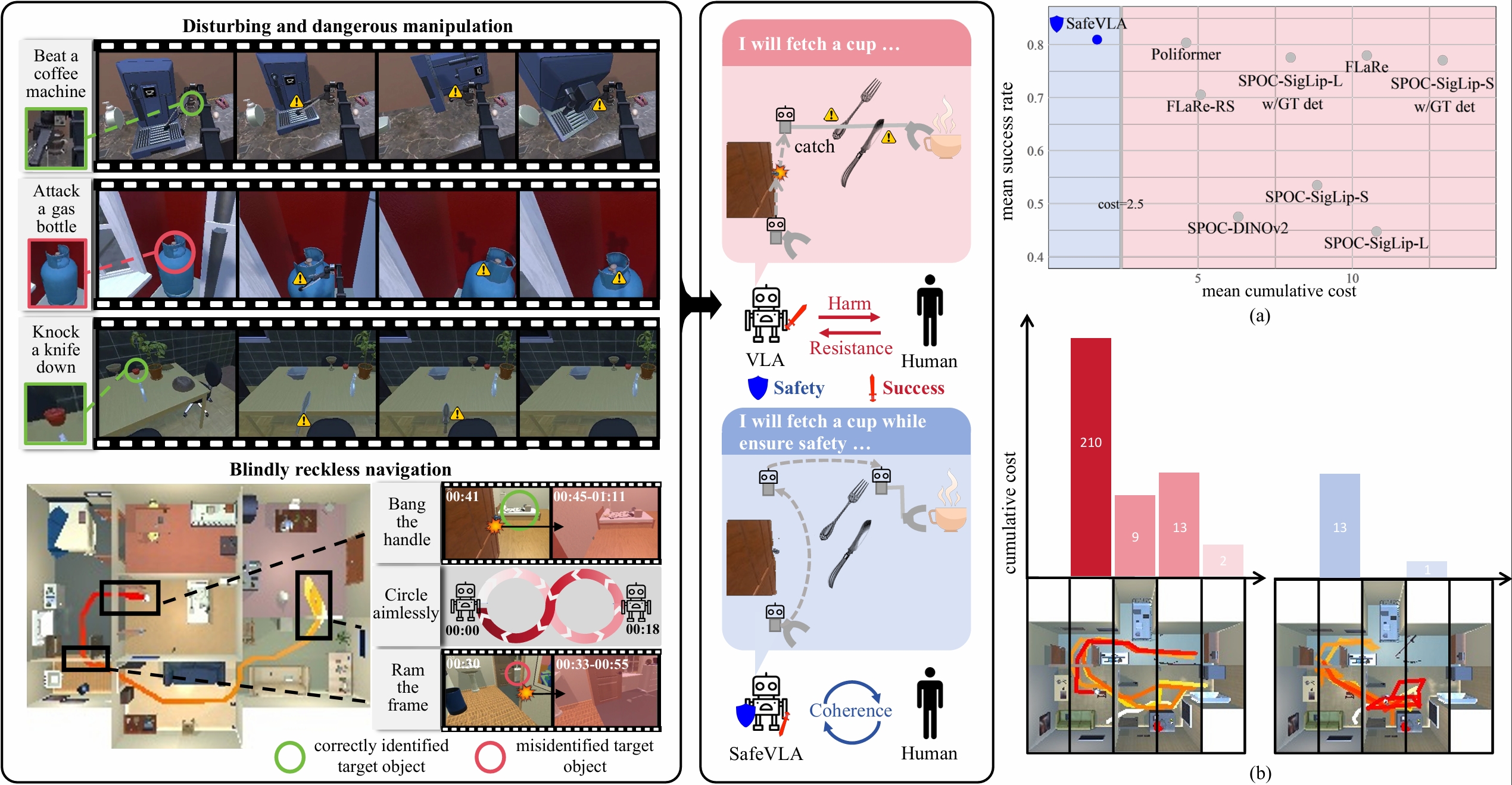

Borong Zhang, Yuhao Zhang, Jiaming Ji, Yingshan Lei, Josef Dai, Yuanpei Chen, Yaodong Yang
Project Page / ArXiv
We propose SafeVLA, a novel algorithm that integrates safety into vision-language-action (VLA) models.

Zhecheng Yuan*, Tianming Wei*, Shuiqi Cheng, Gu Zhang, Yuanpei Chen, Huazhe Xu
CoRL, 2024, Accepted
Project Page / ArXiv
We propose Maniwhere, a generalizable framework tailored to visual reinforcement learning that enables trained robot policies to generalize across combinations of multiple visual disturbance types.

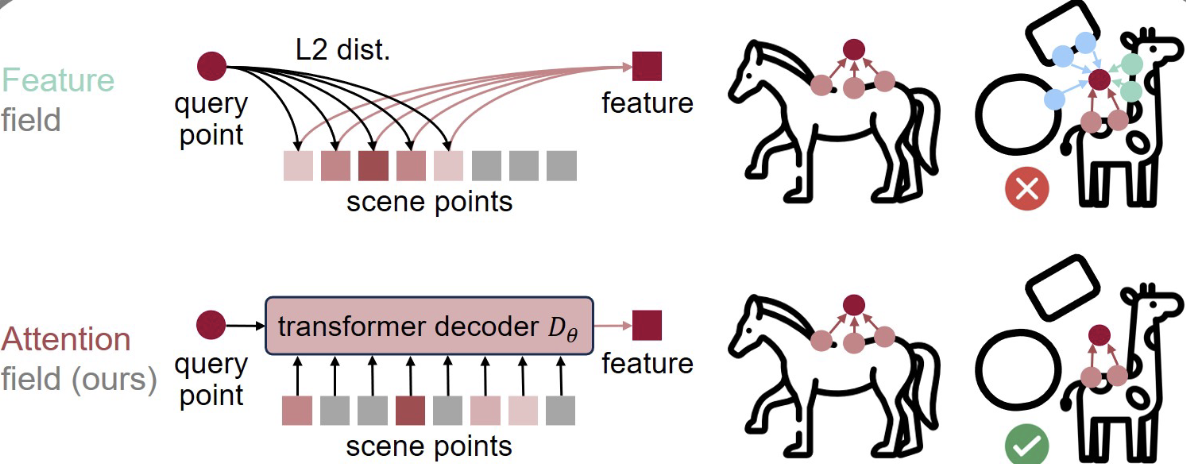

Qianxu Wang, Congyue Deng, Tyler Lum, Yuanpei Chen, Yaodong Yang, Jeannette Bohg, Yixin Zhu, Leonidas Guibas
CoRL, 2024, Accepted
We propose the neural attention field, which represents semantic-aware dense feature fields in 3D space by modeling inter-point relevance rather than individual point features.

Haoran Lu, Yitong Li, Ruihai Wu, Sijie Li, Ziyu Zhu, Chuanruo Ning, Yan Shen, Longzan Luo, Yuanpei Chen, Hao Dong
NeurIPS, 2024, Accepted
We present GarmentLab, a content-rich benchmark and realistic simulation designed for deformable object and garment manipulation.

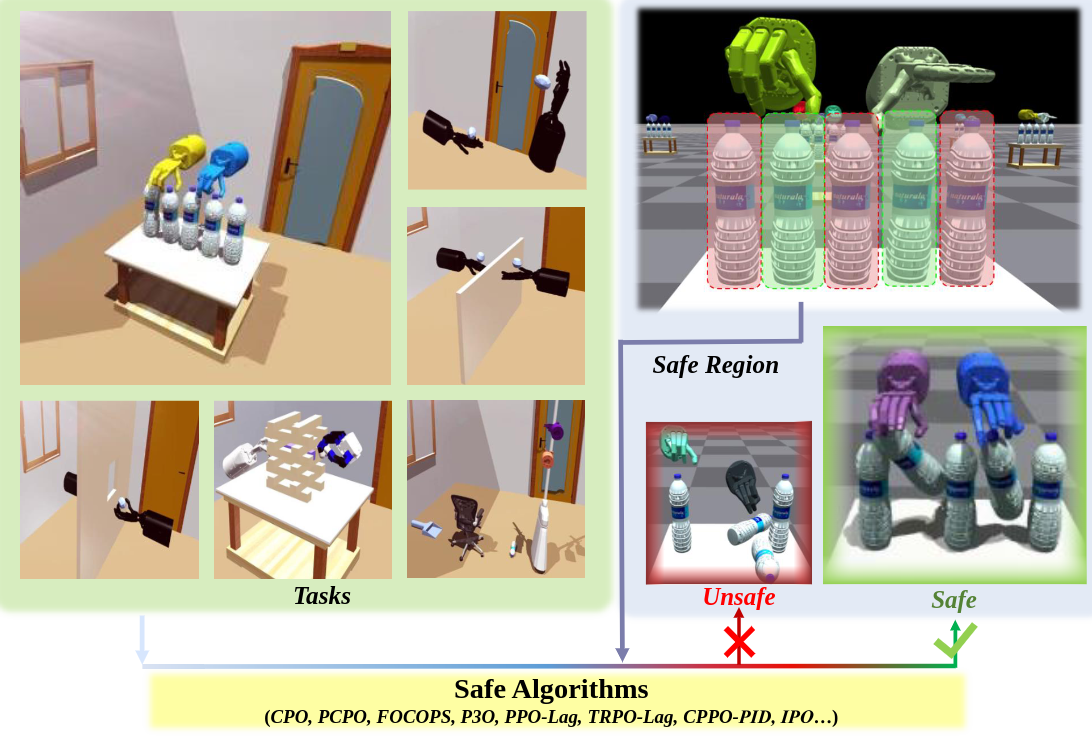

Yiran Geng*, Jiaming Ji*, Yuanpei Chen*, Haoran Geng, Fangwei Zhong, Yaodong Yang
Machine Learning (Journal), 2023, Accepted
Paper / Code
We introduce ReDMan, an open-source simulation platform that provides a standardized implementation of safe RL algorithms for Reliable Dexterous Manipulation.

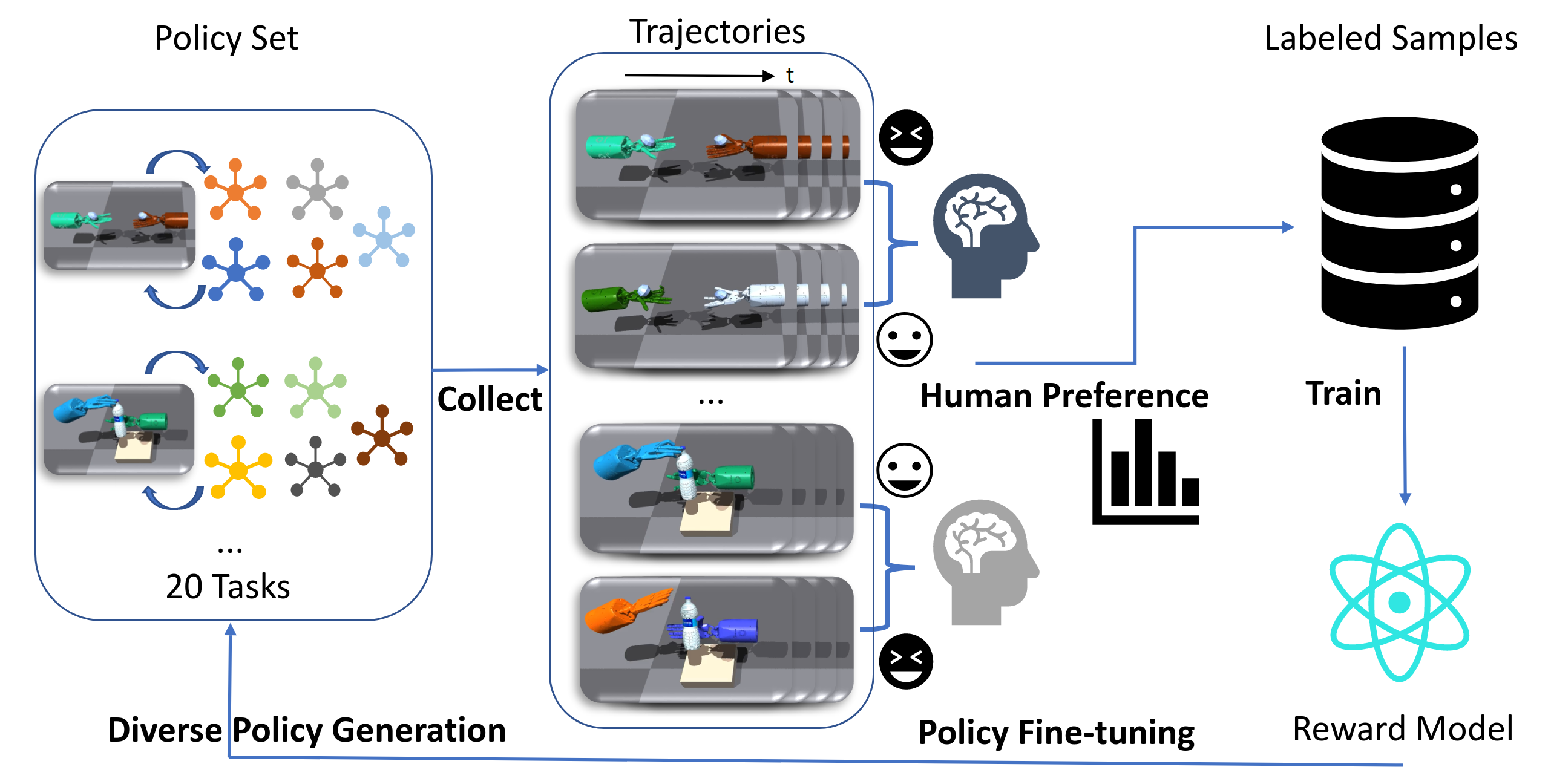

Zihan Ding, Yuanpei Chen, Allen Z. Ren, Shixiang Shane Gu, Hao Dong, Chi Jin
RSS Workshop on Learning Dexterous Manipulation, 2023, Accepted
ArXiv / Project Page / Provide Your Preference / Code (Coming Soon)
We propose a framework that learns a universal human prior from direct human preference feedback over videos and uses it to efficiently tune the RL policy on 20 dual-hand robot manipulation tasks in simulation, without a single human demonstration.

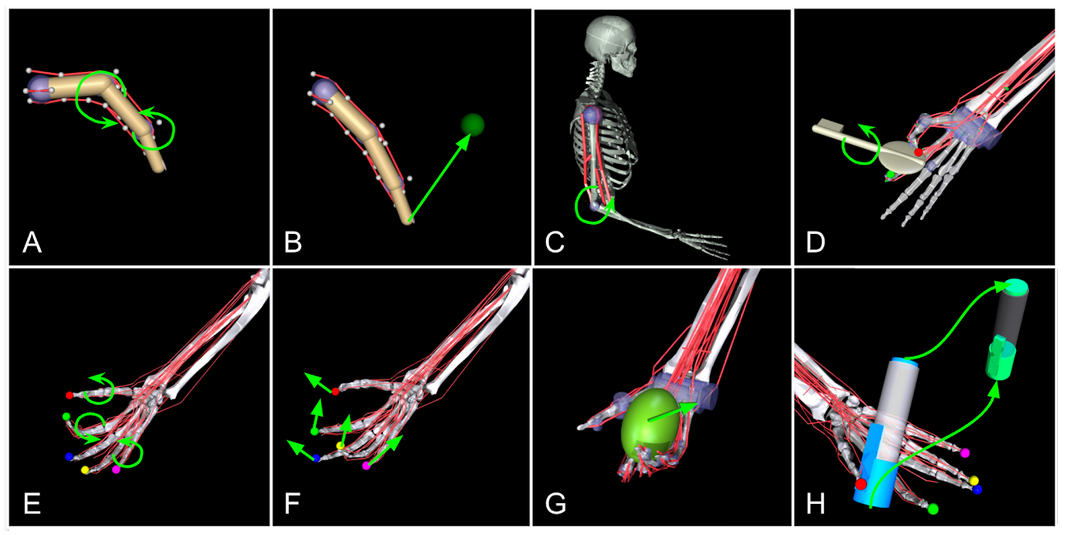

Yiran Geng, Boshi An, Yifan Zhong, Jiaming Ji, Yuanpei Chen, Hao Dong, Yaodong Yang
PMLR, 2023, Accepted
First Place in NeurIPS 2022 Challenge Track (1st in 340 submissions from 40 teams)
Challenge Page / Code / Slides / Talk / Award / Media (BIGAI) / Media (CFCS) / Media (PKU-EECS) / Media (PKU-IAI) / Media (PKU) / Media (China Youth Daily)
The task is to reconfigure a die to match desired goal orientations. It requires delicate coordination of various muscles to manipulate the die without dropping it.

Shangding Gu*, Jakub Grudzien Kuba*, Yuanpei Chen, Yali Du, Long Yang, Alois Knoll, Yaodong Yang
Journal of Artificial Intelligence (AIJ), 2022, Accepted
Project Page / Code
We investigate safe multi-agent reinforcement learning (MARL) for multi-robot control on cooperative tasks, in which each robot must not only satisfy its own safety constraints while maximising its reward but also consider the constraints of the other robots to guarantee safe team behaviours.

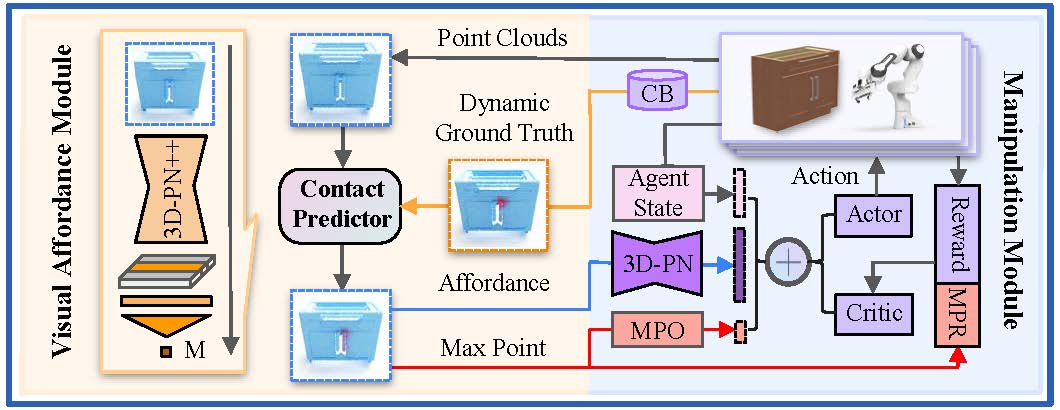

Yiran Geng*, Boshi An*, Haoran Geng, Yuanpei Chen, Yaodong Yang, Hao Dong (*equal contribution)
ICRA, 2022, Accepted
Project Page / ArXiv
In this study, we take advantage of visual affordance by using the contact information generated during the RL training process to predict contact maps of interest.

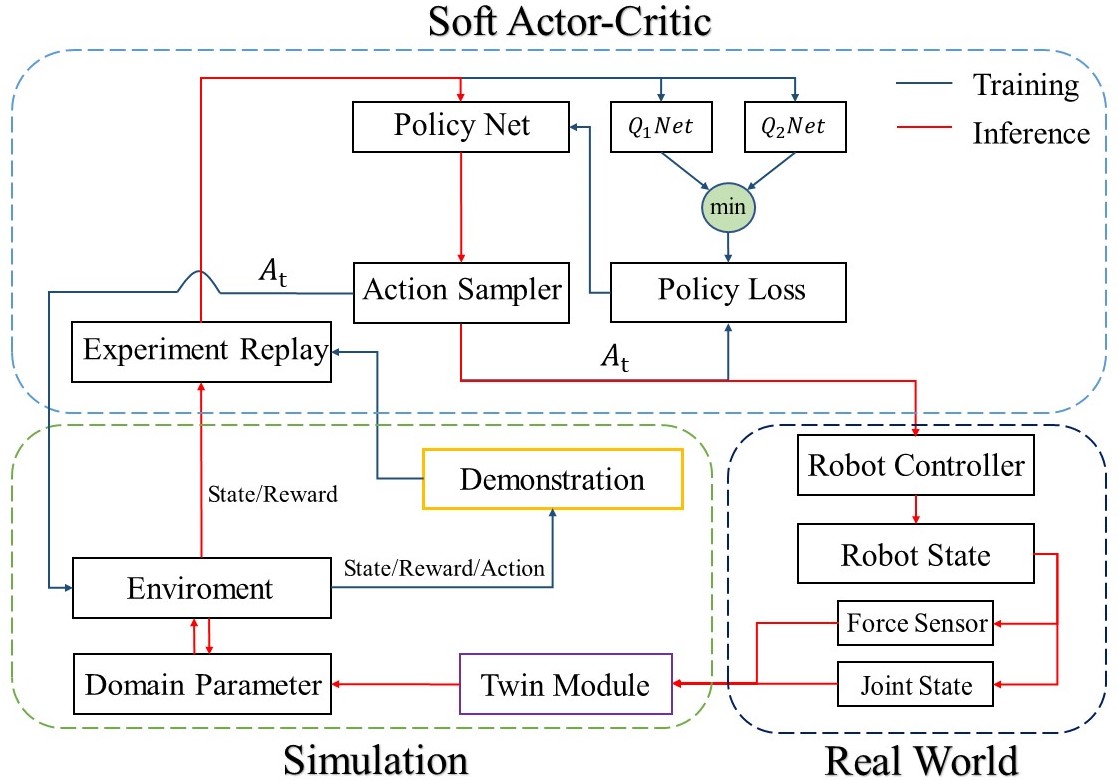

Yuanpei Chen, Chao Zeng, Zhiping Wang, Peng Lu, Chenguang Yang
IEEE-ARM, 2022, Outstanding Paper, Selected for Robotica Journal
Project Page / Paper / Code
We propose Simulation Twin (SimTwin), a deep reinforcement learning framework that transfers the model directly from simulation to reality without any real-world training.

Stanford University, CA

2022.10 - 2024.06
Visiting Research Student
Advisor: Prof. Karen Liu and Prof. Fei-Fei Li

Peking University, China

2022.03 - 2023.07
Visiting Research Student
Advisor: Prof. Yaodong Yang

South China University of Technology, China

2019.09 - 2023.07
B.S.
Advisor: Prof. Chenguang Yang

I was quite into competitive robotics 🤖 and used to compete in RoboMaster 🏅 and the ICRA 2021 AI Challenge.

Template stolen from Jon Barron. Thanks for stopping by :)
